Kimi K2: Moonshot AI’s Open-Source AI Revolution

Unleash the Power of Kimi K2: Open-Source AI Redefining Coding and Intelligence

What is Kimi K2?

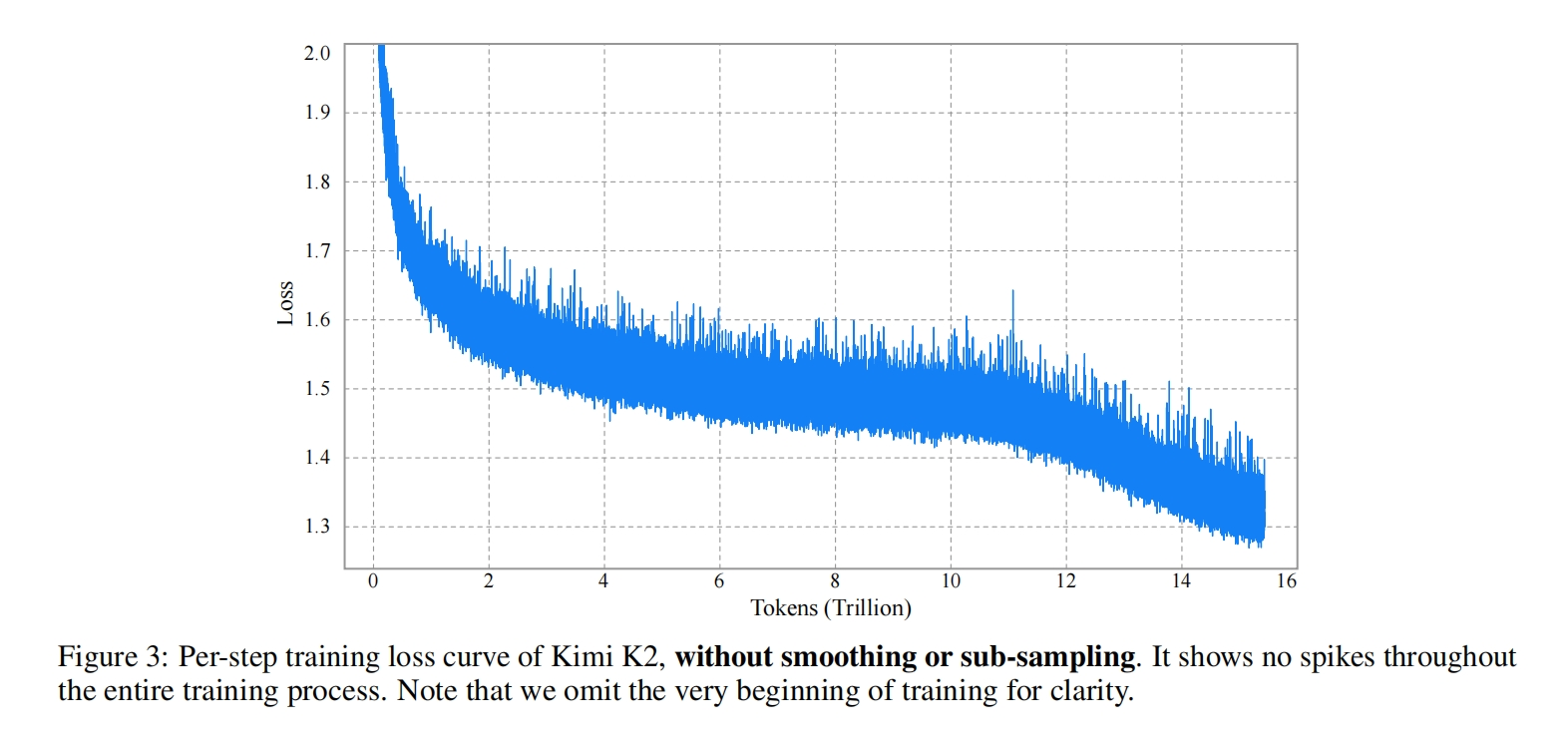

Kimi K2 is an advanced Mixture of Experts (MoE) language model launched by Moonshot AI, designed to push the boundaries of artificial intelligence. It has 1 trillion total parameters, of which 32 billion are active per token during inference, and was pre-trained on 15.5 trillion tokens using the MuonClip optimizer with, according to Moonshot AI, zero training instability. This Kimi AI model excels in coding, reasoning, and agent tasks.
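To make the "active parameters" idea concrete, here is a minimal, hypothetical sketch of top-k expert routing in plain Python. The toy scalar experts, the softmax gate over the selected logits, and the value of k are illustrative assumptions; Kimi K2's real router and expert shapes differ.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and weight them.

    Softmax over the selected logits is one common gating choice; it is an
    assumption here, not a description of Kimi K2's exact router.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Run only the k routed experts for one token and mix their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


if __name__ == "__main__":
    # Toy setup: 16 scalar "experts"; each one just scales its input.
    experts = [lambda x, s=s: s * x for s in range(16)]
    random.seed(0)
    logits = [random.gauss(0.0, 1.0) for _ in experts]
    print(moe_forward(1.0, experts, logits, k=2))

    # Headline figures from the text: 32B of 1T parameters active per token.
    print(f"active fraction: {32e9 / 1e12:.1%}")
```

Only the selected experts run for a given token, which is how a 1-trillion-parameter model can activate just 32 billion parameters (about 3.2%) per token.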

What’s New Compared to Kimi 1.5

Architecture Upgrade:

Kimi K2 uses a Mixture of Experts (MoE) architecture, whereas Kimi 1.5 is built around a multimodal reinforcement learning (RL) training framework.

Parameter Scale:

Kimi K2 has 1 trillion total parameters. Kimi 1.5's exact parameter count was never disclosed but is presumed to be considerably smaller.

Context Window:

Kimi K2 supports a 128K-token context window, matching the 128k tokens used for Kimi 1.5; the later Kimi-K2-Instruct-0905 update extends it to 256K tokens.

Training Data:

Kimi K2 was pre-trained on 15.5 trillion tokens, far exceeding Kimi 1.5’s training data.

Kimi K2’s Core Capabilities

Exceptional Performance:

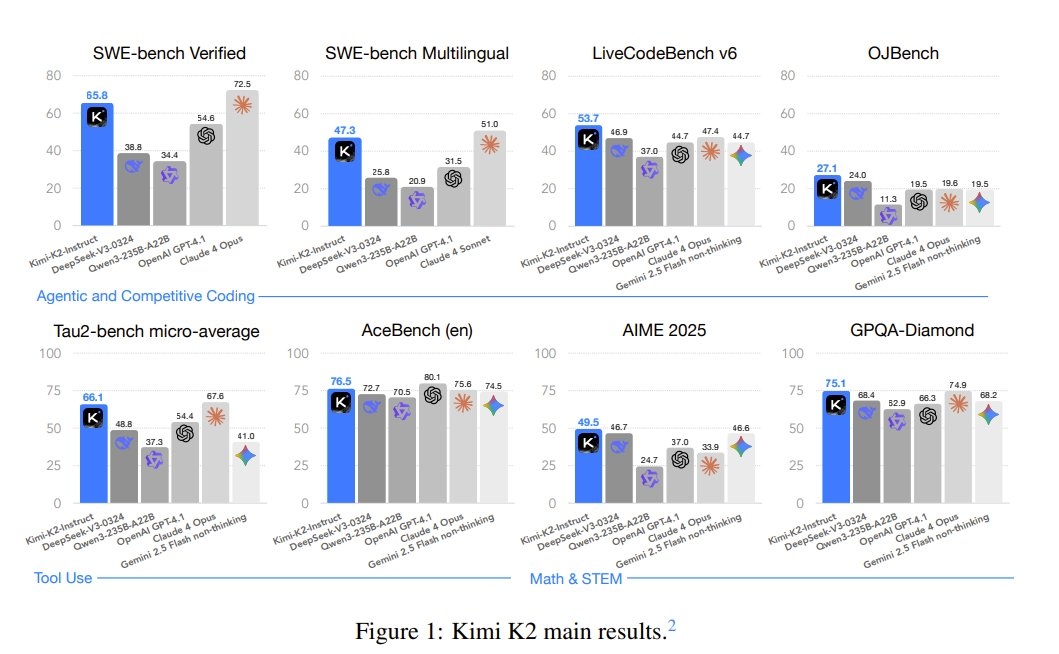

Outperforms open models such as DeepSeek-V3 and Qwen 2.5, as well as some proprietary models, on benchmarks including SWE-bench Verified (65.8), AIME 2025, and GPQA Diamond (75.1).

Open-Source Availability:

Open model weights (Kimi-K2-Base and Kimi-K2-Instruct) released under a modified MIT license and available on Hugging Face for research and customization.

Extended Context Window:

Supports a 128K-token context window (256K in the Kimi-K2-Instruct-0905 update), enough for long documents and complex multi-step tasks.

Multi-Domain Expertise:

Excels in coding, mathematics, STEM, and general knowledge reasoning.

Pricing Comparison

Kimi K2 is competitive with Claude 4 Sonnet on coding benchmarks at roughly 80% lower per-token cost.

| Model | Price per 1M tokens (input / output) | LiveCodeBench score (higher = better) |
|---|---|---|
| Kimi K2 | $0.60 / $2.50 | 53.7 |
| Claude 4 Sonnet | $3.00 / $15.00 | 48.5 |
| GPT-4.1 | $2.00 / $8.00 | 44.7 |
| DeepSeek-V3-0324 | $0.12 / $0.20 | 46.9 |
| Qwen3-235B-A22B (Non-thinking) | $0.28 / $1.14 | 37.0 |
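The cost gap in the table is easy to check for a specific workload. This sketch copies the per-million-token prices from the table and ignores cache-hit discounts, batch pricing, and any price changes since publication; the 10M-input / 2M-output workload is hypothetical.

```python
# Prices in USD per 1M tokens (input, output), copied from the pricing table.
PRICES = {
    "Kimi K2": (0.60, 2.50),
    "Claude 4 Sonnet": (3.00, 15.00),
    "GPT-4.1": (2.00, 8.00),
    "DeepSeek-V3-0324": (0.12, 0.20),
    "Qwen3-235B-A22B (Non-thinking)": (0.28, 1.14),
}


def workload_cost(model, input_tokens, output_tokens):
    """Estimated USD cost of a workload, ignoring caching and discounts."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def savings(model, baseline, input_tokens, output_tokens):
    """Fractional saving of `model` versus `baseline` for the same workload."""
    return 1 - (workload_cost(model, input_tokens, output_tokens)
                / workload_cost(baseline, input_tokens, output_tokens))


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    for name in PRICES:
        print(f"{name:32s} ${workload_cost(name, 10_000_000, 2_000_000):8.2f}")
    cut = savings("Kimi K2", "Claude 4 Sonnet", 10_000_000, 2_000_000)
    print(f"Kimi K2 vs Claude 4 Sonnet: {cut:.0%} cheaper")
```

For that workload Kimi K2 costs about $11 versus $60 for Claude 4 Sonnet, roughly 82% less, in line with the roughly 80% saving quoted for Kimi K2.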

Does Kimi K2 Adopt the DeepSeek V3 Architecture?

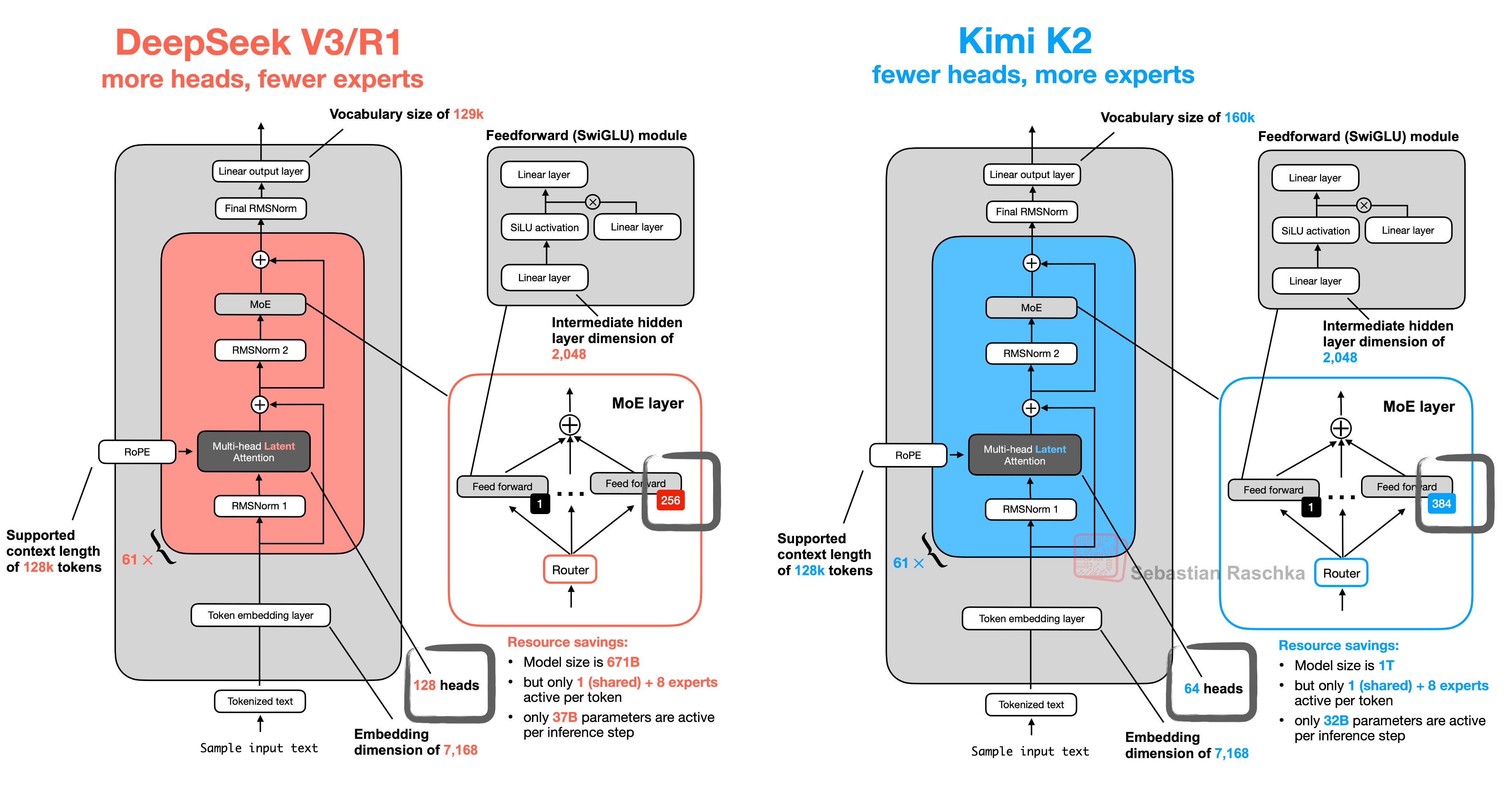

Kimi K2 builds on the DeepSeek V3 architecture rather than on its trained weights. It uses more routed experts (384 vs. DeepSeek V3's 256) and multi-head latent attention (MLA) with fewer attention heads (64 vs. 128), scaling to 1 trillion total parameters. These changes bring its performance closer to proprietary models like Gemini and ChatGPT while retaining open-source advantages.
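One consequence of the larger expert pool is greater sparsity. The sketch below compares the fraction of routed experts each token uses. The hyperparameters, including 8 routed experts per token for both models, are our reading of the public `config.json` files and should be verified before relying on them.

```python
# Assumed hyperparameters from each model's published config; verify before use.
CONFIGS = {
    "DeepSeek V3": {"routed_experts": 256, "experts_per_token": 8, "attention_heads": 128},
    "Kimi K2": {"routed_experts": 384, "experts_per_token": 8, "attention_heads": 64},
}


def routed_sparsity(cfg):
    """Fraction of routed experts a single token activates in one MoE layer."""
    return cfg["experts_per_token"] / cfg["routed_experts"]


if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        print(f"{name}: {cfg['experts_per_token']}/{cfg['routed_experts']} experts "
              f"= {routed_sparsity(cfg):.2%} per token, "
              f"{cfg['attention_heads']} attention heads")
```

Kimi K2 activates a smaller share of its routed experts per token (about 2.1% versus about 3.1%), so total capacity grows while active parameters per token stay comparable (32B for Kimi K2, 37B for DeepSeek V3).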

Use Cases of Kimi K2

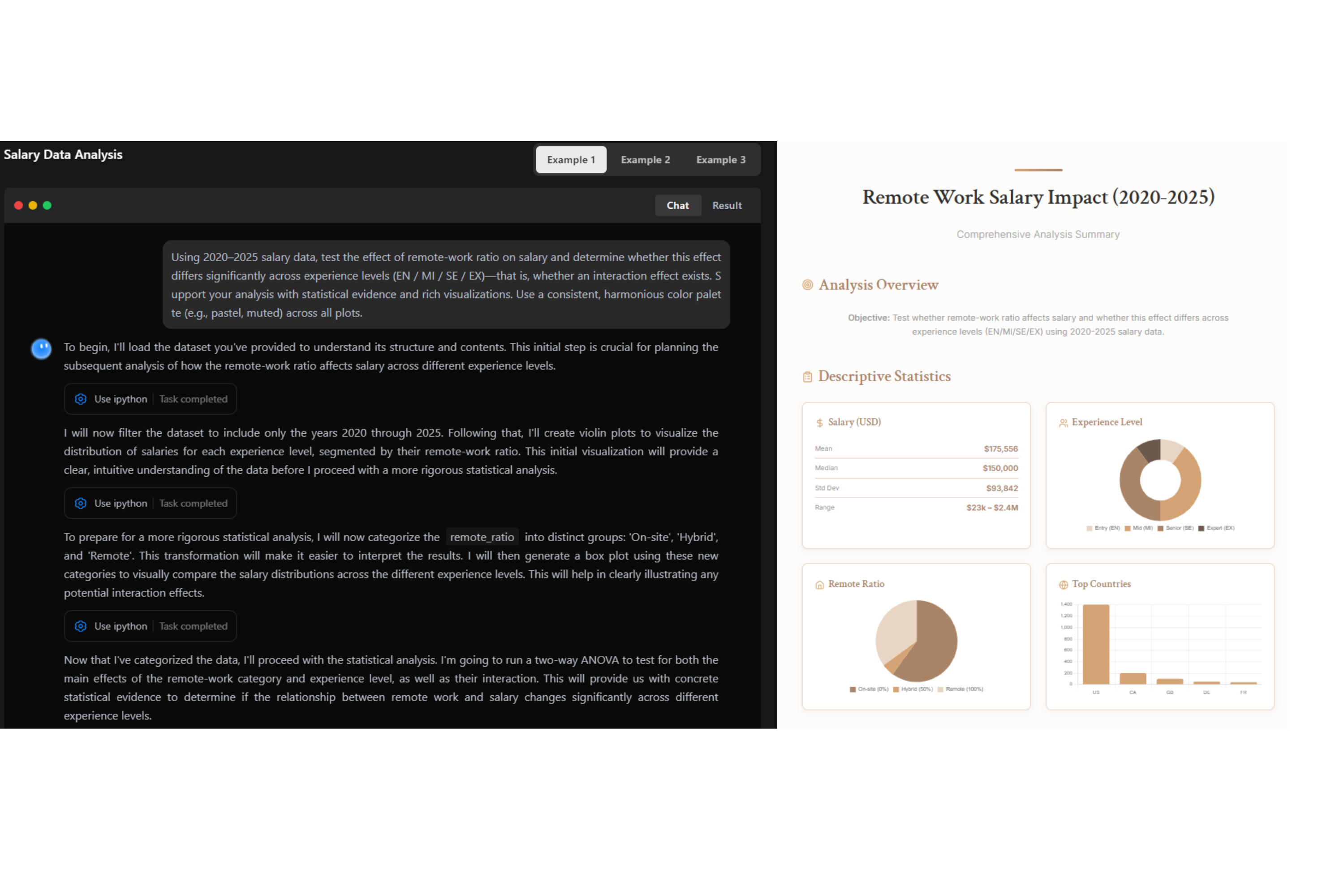

Salary Data Analysis

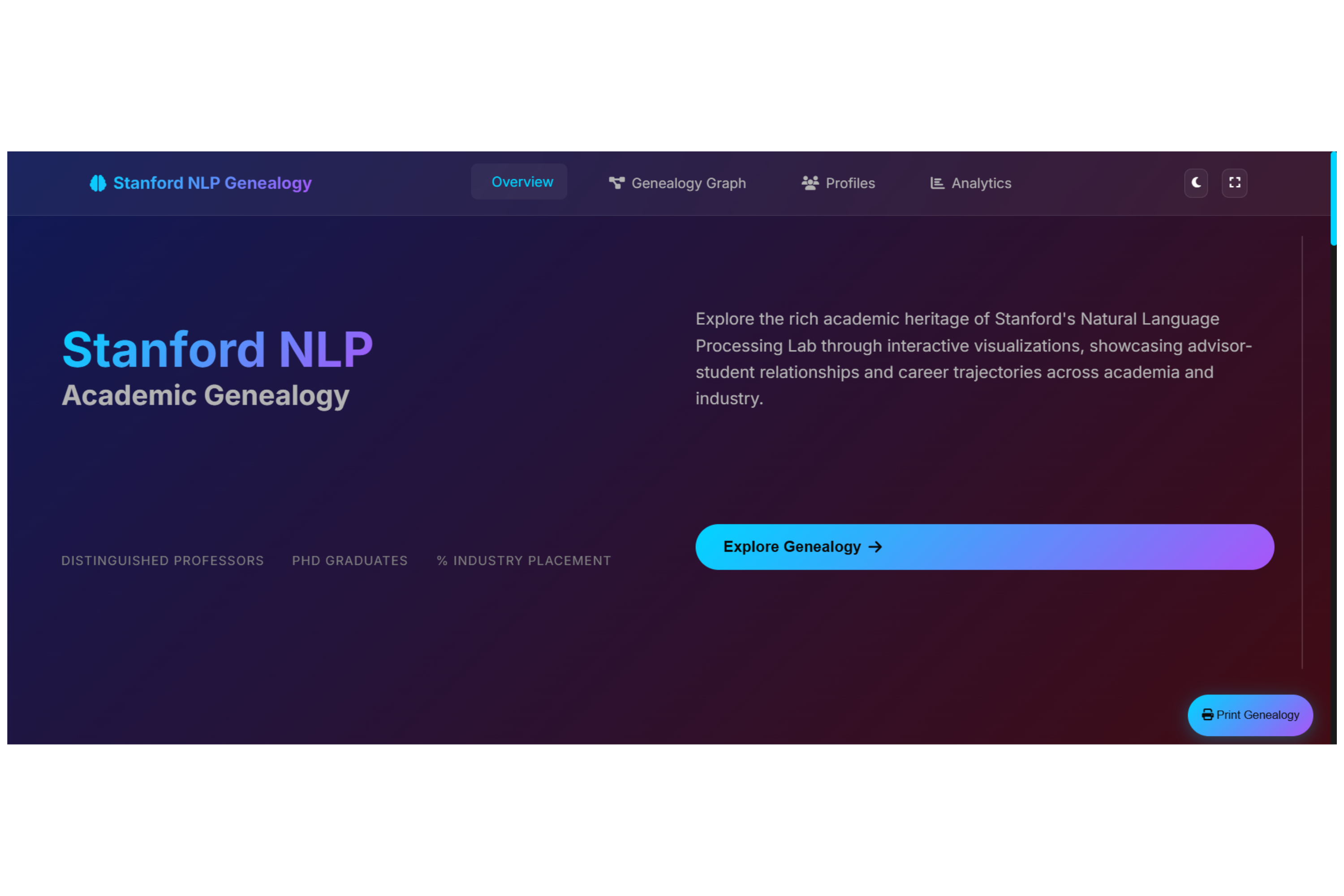

Stanford NLP Genealogy

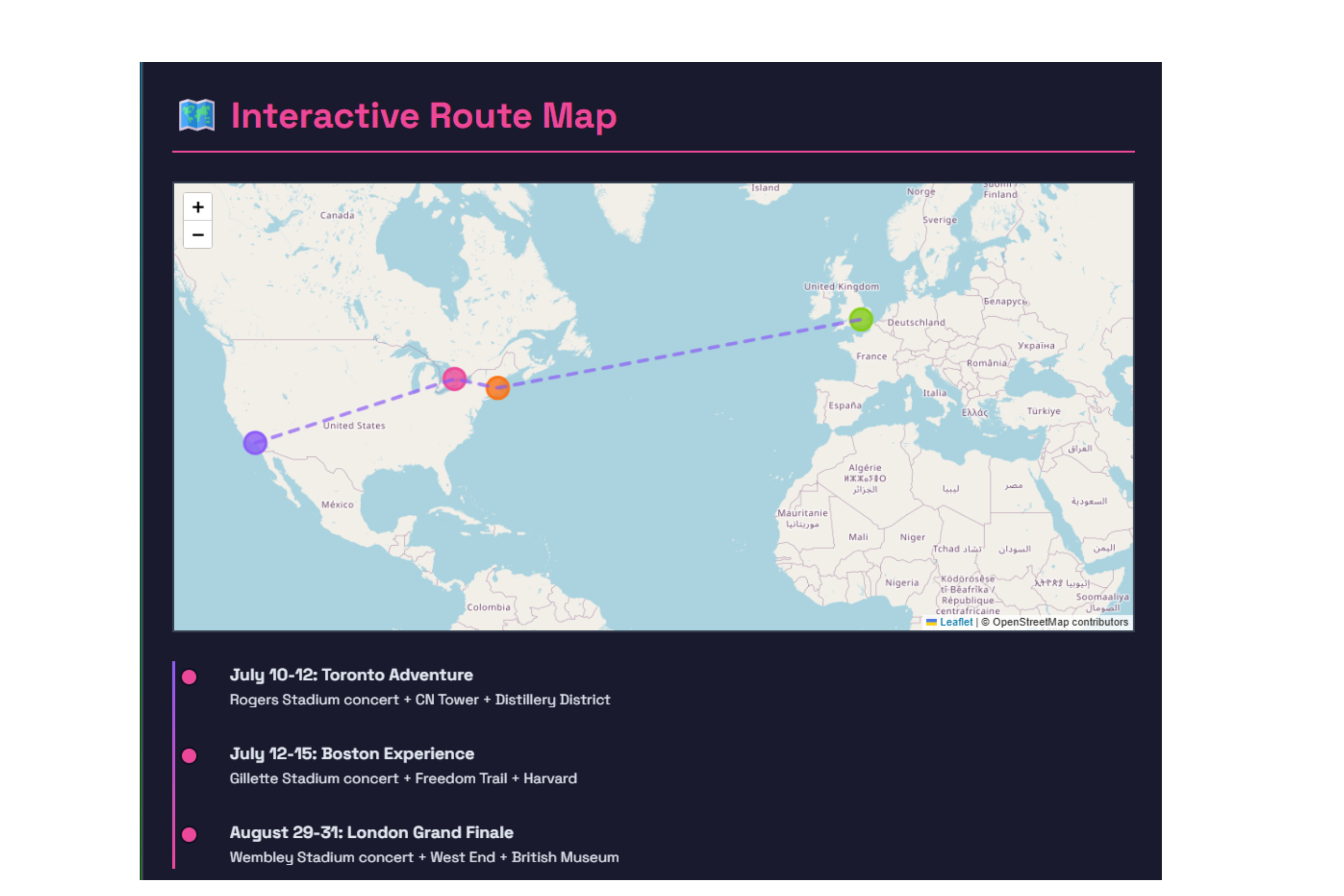

Tour Plan Design

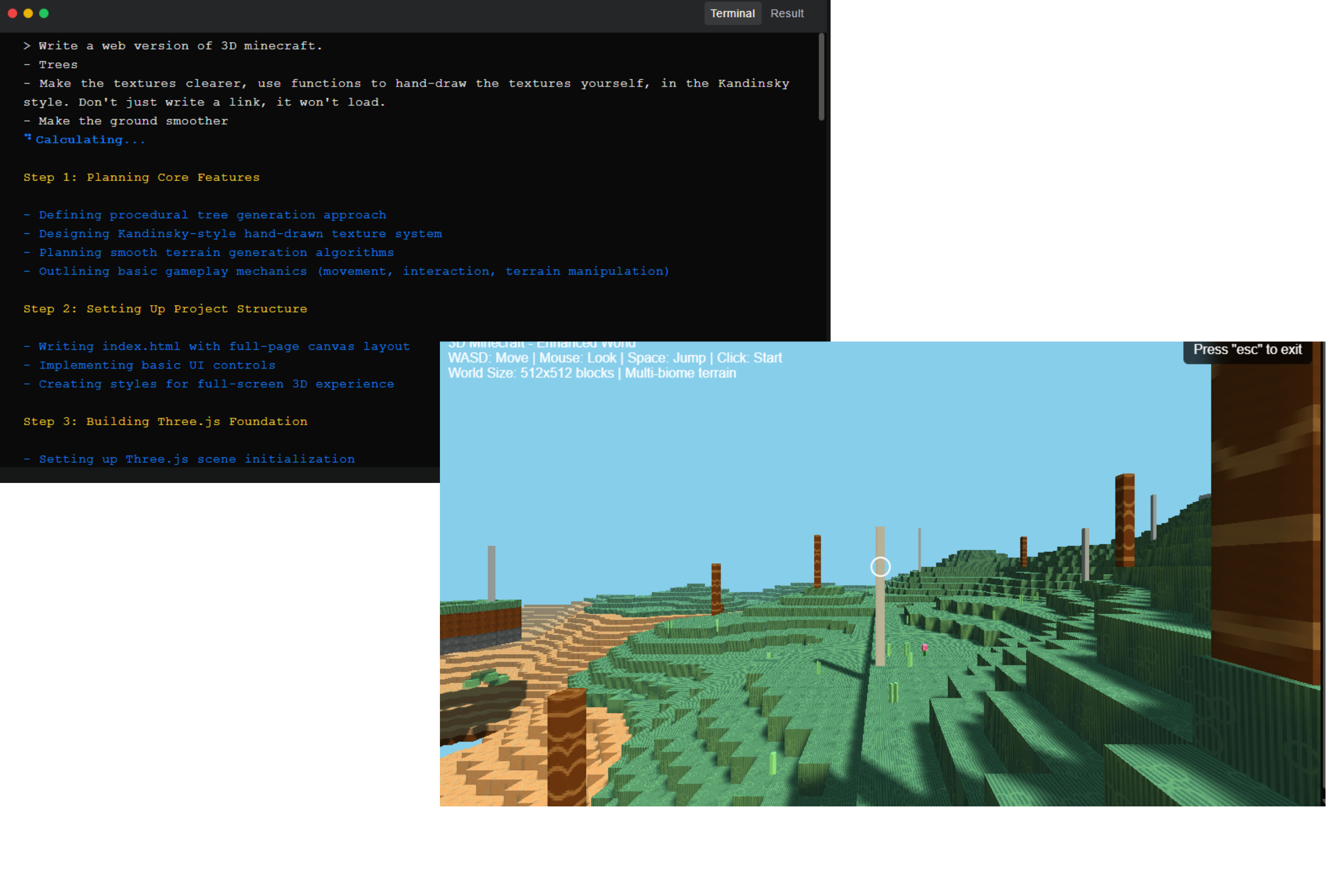

JavaScript Minecraft

Use Kimi K2 with API

For developers looking to integrate Kimi K2 into their applications, the Kimi Platform offers an OpenAI/Anthropic-compatible API, making it easy to adapt existing workflows. The API is particularly suited for building agent applications with advanced tool-calling capabilities.
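Because the API follows the OpenAI chat-completions shape, a request is an ordinary HTTPS POST. The sketch below builds one with only the Python standard library. The base URL `https://api.moonshot.ai/v1`, the model ID `kimi-k2-0711-preview`, the default temperature, and the `MOONSHOT_API_KEY` environment variable name are assumptions based on Moonshot's docs at launch; confirm current values on platform.moonshot.ai.

```python
import json
import os
import urllib.request

# Assumed values; check the Kimi Platform docs for the current ones.
BASE_URL = "https://api.moonshot.ai/v1"
MODEL = "kimi-k2-0711-preview"


def build_chat_request(api_key, messages, model=MODEL, base_url=BASE_URL,
                       temperature=0.6):
    """Build an OpenAI-style /chat/completions request without sending it.

    temperature=0.6 is an assumed default for Kimi-K2-Instruct.
    """
    body = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(api_key, messages, **kwargs):
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(api_key, messages, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MOONSHOT_API_KEY")  # hypothetical variable name
    if key:
        print(chat(key, [{"role": "user", "content": "Explain MoE in one sentence."}]))
```

Since only `base_url` and `model` are Kimi-specific, the same function could target a self-hosted OpenAI-compatible server (vLLM, for example, listens on `http://localhost:8000/v1` by default), provided you pass the model name that server registered.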

Sign up for an API key at moonshot.ai.

Configure your application to use the Kimi K2 API, leveraging its compatibility with OpenAI or Anthropic interfaces.

Explore the tool-calling API to create intelligent agents for tasks like data analysis, code generation, or autonomous workflows.
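Tool calling follows the same OpenAI conventions: you describe tools in a `tools` list, the model replies with `tool_calls`, and your code runs them and returns `role: "tool"` messages. The sketch below shows only that local dispatch step. The `get_weather` tool and the hand-written reply are hypothetical, and it assumes Kimi's tool-call payloads match the OpenAI format, as the compatibility claim suggests.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical local tool; a real agent would call a weather service."""
    return {"city": city, "forecast": "sunny"}


# Tool schema in the OpenAI "tools" format, sent along with each request.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message: dict) -> list:
    """Execute each requested tool and build the 'tool' messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results


if __name__ == "__main__":
    # A hand-written assistant message shaped like an OpenAI-style tool call.
    fake_reply = {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_0",
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"city": "Beijing"}'},
        }],
    }
    for msg in run_tool_calls(fake_reply):
        print(msg)
```

In a full agent loop you would send `TOOLS` with each request, append the assistant message and these tool messages to the conversation, and call the API again until the model replies without `tool_calls`.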

Serve Kimi K2 on Your Own

For advanced users and researchers, Kimi K2’s open-source model weights allow for local deployment, offering full control and customization. The model supports several high-performance inference engines to ensure efficient operation.

Download the Kimi K2 model weights from Hugging Face.

Set up your preferred inference engine (e.g., vLLM, SGLang) following the engine’s documentation.

Follow the detailed deployment instructions provided in the Moonshot AI GitHub repository.

Optimize your setup for your hardware. For example, Awni Hannun demonstrated Kimi K2 running with 4-bit quantization in MLX LM across two 512 GB Mac Studio M3 Ultra machines.
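A back-of-the-envelope calculation shows why a 4-bit setup like the one above needs two 512 GB machines. The helper counts weight memory only, in decimal gigabytes, and ignores KV cache, activations, and quantization-scale overhead, so treat its numbers as lower bounds.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and quantization scale overhead, so real
    deployments need extra headroom.
    """
    return total_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    TOTAL_PARAMS = 1e12  # Kimi K2 total parameter count, from the text
    for label, bits in [("BF16", 16), ("FP8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_memory_gb(TOTAL_PARAMS, bits):,.0f} GB")
    print(f"two 512 GB machines: {2 * 512} GB total")
```

At 4 bits the weights alone need about 500 GB, leaving almost no headroom on a single 512 GB machine, whereas two machines provide roughly 1 TB in total.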

Frequently Asked Questions

What is Kimi K2?

Kimi K2 is an open-source Mixture of Experts model by Moonshot AI with 1 trillion parameters, optimized for coding, reasoning, and agent tasks.

How does Kimi K2 compare to Kimi 1.5?

Kimi K2 offers a larger parameter scale (1T total parameters vs. an undisclosed count for Kimi 1.5), a 128K-token context window (256K in the 0905 update), and enhanced agent capabilities.

How does Kimi K2 compare to Deepseek and Qwen 2.5?

Kimi K2 outperforms DeepSeek-V3 and Qwen 2.5-Max on coding and reasoning benchmarks such as SWE-bench Verified and AIME 2025.

Is Kimi K2 free?

Model weights are open-source and free to download, while API usage is priced at $0.60 per million input tokens ($0.15 on cache hits) and $2.50 per million output tokens.

What are Kimi K2’s main advantages?

Large-scale parameters, extended context window, open-source availability, and strong agent capabilities.

How can I access Kimi K2?

Use it online at kimi.ai or integrate via API at platform.moonshot.ai.